The InstCombine Pass

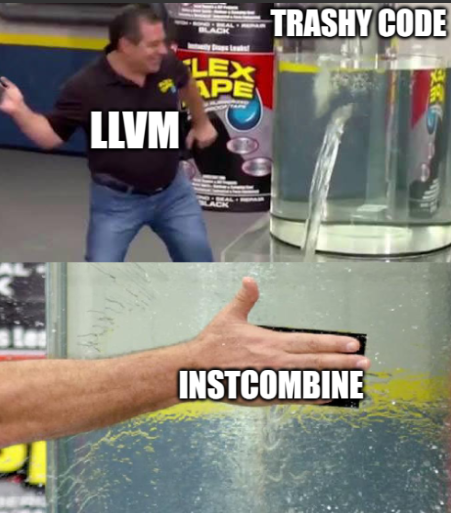

LLVM’s InstCombine pass is one of those optimization passes that quietly does a massive amount of work behind the scenes, making your IR cleaner, leaner, and, well… a lot less embarrassing.

Let's say you're non-chalantly hitting away those keys and write some really impactful code.

Such as this piece I wrote:

int foo(int x) {

return (x * 2) / 2;

}

A direct translation into LLVM IR would look something like this:

+ define dso_local noundef i32 @foo(int)(i32 noundef %x) {

+ entry:

+ %x.addr = alloca i32, align 4

+ store i32 %x, ptr %x.addr, align 4

+ %0 = load i32, ptr %x.addr, align 4

+ %mul = mul nsw i32 %0, 2

+ %div = sdiv i32 %mul, 2

+ ret i32 %div

+ }

That's fine, looks clean.

Here's how the same looks after one InstCombine Pass:

define dso_local noundef i32 @foo(int)(i32 noundef %x) {

entry:

- %x.addr = alloca i32, align 4

- store i32 %x, ptr %x.addr, align 4

- %0 = load i32, ptr %x.addr, align 4

- %mul = mul nsw i32 %0, 2

- %div = sdiv i32 %mul, 2

ret i32 %div

}

Sacrebleu!

You can view the entire opt pipeline here

This is because InstCombine performs something known as a Peephole optimization.

Peephole optimizations and everything in between...

In the straightforward sense of the word, a peephole optimization is applied on a set of closely located instructions.

+ MOV b, R0

+ ADD c, R0

+ MOV R0, a

- MOV a, R0 ; Optimized away

+ ADD e, R0

+ MOV R0, d

But since compilers use SSA based IR(Intermediate Representation), these optimizations can be levied even if the concerned instructions are located far away across basic blocks provided that there is some or of dominance between the instructions.

%a = add %x, 1

...

%b = add %y, 2

...

%c = add %a, %b

Here we say that %c is dominated by %a and %b.

The transformations performed by InstCombine may seem simple at first glance — small algebraic rewrites, local constant folding, and redundant operation elimination. They’re mechanically obvious and visually satisfying — but the real question is, why are they valid? And more importantly, how do they ensure correctness without introducing subtle miscompilations?

To answer this, we must first establish what a peephole optimization should guarantee. The strongest correctness requirement is semantic equivalence: for all possible values of the inputs, the transformed code must preserve the observable behavior of the original code.

But what exactly counts as “observable behavior”?

- The computed result — obviously.

- Memory side effects, if any.

- But not execution time, code size, or microarchitectural artifacts — these are irrelevant to correctness.

- In some cases, security-sensitive applications might care about microarchitectural side effects, but InstCombine operates purely at the IR level and does not explicitly address such concerns.

However, enforcing strict equivalence is not the only valid approach to transformation correctness. Instead, what InstCombine ensures is refinement.

Refinement: The Crux of InstCombine

Refinement, in the compiler context, means removing behaviors that are redundant, unnecessary, or inefficient while preserving correctness. Rather than requiring a one-to-one mapping of transformations, refinement allows for a more constrained, deterministic version of the original computation.

InstCombine applies this principle extensively. Consider an example:

%a = mul i32 %x, 2

is rewritten to:

%a = shl i32 %x, 1

This instruction is not just equivalent, its a refinement. The shl instruction eliminated the non-determinism associated with signed multiplication in certain contexts.

InstCombine: A Localized Refiner

Unlike broader optimization passes that consider global control flow, InstCombine is strictly local. It doesn’t analyze loops, inline functions, or propagate constants across basic blocks—it only applies transformations that can be verified within a single instruction’s scope. This makes it safe but also means it depends on other passes like GVN, SimplifyCFG, and Loop Simplify for full program-wide optimizations.

The Balancing Act 𐄷

InstCombine doesn't blindly apply every possible transformation it knows. Instead, it relies on sophisticated cost models to determine if a change truly improves the code. These cost models represent one of the most nuanced aspects of the pass, walking a tightrope between:

- Reducing instruction count

- Improving execution speed

- Managing register pressure

- Enabling further optimizations down the pipeline

The TargetTransformInfo Interface

At the heart of InstCombine's cost evaluation is the TargetTransformInfo (TTI) interface. This is LLVM's way of abstracting target-specific cost information so that mid-level optimizations like InstCombine can make informed decisions without knowing the intimate details of every target architecture.

if (TTI.getArithmeticInstrCost(Instruction::Mul, Ty) >

TTI.getArithmeticInstrCost(Instruction::Shl, Ty)) {

// Replace multiplication with shift if shift is cheaper

// ...

}

The TTI provides methods like:

getArithmeticInstrCost: Estimates the cost of arithmetic instructionsgetMemoryOpCost: Evaluates memory operation costsgetCastInstrCost: Determines the expense of type conversionsgetVectorInstrCost: Assesses vector instruction efficiency

But Order Matters...

%a = add i32 %x, 0 ; This simplifies to just %x

%b = mul i32 %a, 2 ; This could become mul i32 %x, 2

%c = add i32 %b, %b ; This could become mul i32 %b, 2

If we process these instructions in order:

%a = add i32 %x, 0simplifies to just%x%b = mul i32 %x, 2(after substituting%awith%x)%c = add i32 %b, %bcould simplify to%c = mul i32 %b, 2, which is%c = mul i32 %x, 4

The task of InstCombine is pretty simple - Visit instructions and transform them into simpler alternatives. But there is the possibility that one transformation opens the possibility to further transformations.

To handle this InstCombine makes use of the worklist algorithm. Initially all the instructions are added to the worklist. Each instruction is visited one by one and transformed if necessary. If so, the instruction is added back to the worklist along with any dependant instructions for further processing.

Even this isn't sufficient in all cases since certain transforms can diverge from normal behaviour and fail to maintain the worklist correctly. InstCombine adds an additional fixpoint iteration which checks for any changes made. If there are changes, it would restart and begin to fold instructions again until a fixpoint(no new changes) is attained.

In many cases InstCombine reaches the FixPoint in one iteration. However it needs to run another iteration to confirm the fixpoint. This leads to non-trivial performance impact on the compiler.

"instcombine.NumOneIteration": 411380,

"instcombine.NumTwoIterations": 117921,

"instcombine.NumThreeIterations": 236,

"instcombine.NumFourOrMoreIterations": 2,

As seen from this testcase, LLVM performs 22.3% useless fixpoint iterations just for verification purposes. Due to this the fixpoint iteration was recently removed from LLVM altogeather.

If you found that interesting you should definitely give this a read!

The InstCombine Family

The prodigal InstCombine pass is infact a part of a larger family of passes which share similar design goals and work on similar patterns. Personally one of the most confusing parts of writing a transform is to figure out which pass it should fall under.

The InstCombine Family:

- ConstantFolding: Evaluates instructions with constant operands at compile time

- ValueTracking: Analyzes instructions to derive facts about values

- InstructionSimplify: Performs "zero-new-instruction" simplifications

- InstCombine: Implements general instruction combining transformations

- AggressiveInstCombine: Houses more expensive or specialized transformations

- VectorCombine: Focuses on vector-specific optimizations with target awareness

We shall now dissect these passes one by one, lest ye place thine optimization in an unworthy home and incur the dreaded wrath of LLVM’s gatekeepers🐉.

ConstantFolding

If an instruction has only constant operands, this pass will eagerly evaluate it and replace it with a constant. Pretty basic stuff, but it’s fundamental to keeping IR clean and reducing redundant computations.

Basically:

- If every operand is constant, fold the operation.

- No side effects.

- Doesn't modify the IR structure, just replaces values.

- Doesn't try to be fancy—leave the complex reasoning to other passes.

; Before constant folding

%result = add i32 5, 3

; After constant folding

%result = i32 8

Magick!

Use ConstantFolding when:

- You’re dealing with an instruction that has only constant operands.

- The operation has no side effects.

- You think LLVM should have already optimized it, but *surprise*—it didn’t.

ValueTracking

This pass exists purely to analyze values. It doesn’t modify IR, doesn’t simplify anything—it just answers questions like:

- Is this value always non-zero?

- What bits in this integer are guaranteed to be zero?

- Can we prove this value is always in a certain range?

Basically:

- No transformations—this is a library, not an optimization pass.

- Fast analysis only—anything expensive goes elsewhere.

- Used by a bunch of other optimizations to make better decisions.

ValueTracking implementation can be found at llvm/lib/Analysis/ValueTracking.cpp. To understand ValueTracking viscerally, let's walk through an optimization using ComputeNumSignBits.

For instance, take the following IR,

; Starting with a byte (i8)

%a = add i8 -1, 0 ; %a = 11111111

%b = ashr i8 %a, 2 ; %b = 11111111

In this case, ComputeNumSignBits(%a) would return 8 because all bits are sign bits (all 1s). When InstCombine sees the ashr instruction, it calls ComputeNumSignBits to check if the shift is necessary.

Since we know all 8 bits are sign bits, shifting right by 2 won't change anything - we'll still have all 1s. InstCombine can then eliminate the ashr instruction entirely and just use %a directly.

InstSimplify

This pass is for simplifications that don’t create new instructions. That’s it. If you need to create a new instruction, you’re in the wrong place. Go to InstCombine.

Basically:

- You can replace instructions, but you cannot create new ones.

- The simplification must always be beneficial.

- Idempotent: running it twice does nothing new.

Instead of beating the dead horse, direct your attention to the following example.

; Example 1: Redundant operations that instsimplify should handle

define i32 @test_instsimplify(i32 %a, i32 %b) {

entry:

; Add zero (should simplify to %a)

%add_zero = add i32 %a, 0

; Multiply by one (should simplify to %b)

%mul_one = mul i32 %b, 1

; xor with zero (should simplify to %a)

%xor_zero = xor i32 %a, 0

; Comparison that's always true

%always_true = icmp ule i32 0, %a

%select_val = select i1 %always_true, i32 %a, i32 0

; OR with zero (should simplify to %b)

%or_zero = or i32 %b, 0

; AND with all ones (should simplify to %a)

%and_ones = and i32 %a, -1

; Trivial PHI with one predecessor

br label %next

next:

%phi_simplify = phi i32 [ %add_zero, %entry ]

; Combine some of these

%result = add i32 %phi_simplify, %select_val

ret i32 %result

}

Now give it a whoosh of InstSimplify,

opt -passes=instsimplify -S in.ll -o out.ll

And Voilà!

; ModuleID = 'in.ll'

source_filename = "in.ll"

define i32 @test_instsimplify(i32 %a, i32 %b) {

entry:

br label %next

next: ; preds = %entry

%result = add i32 %a, %a

ret i32 %result

}

AggressiveInstCombine

AggressiveInstCombine handles transformations that are too expensive or specialized for regular InstCombine. It focuses on patterns that require more complex analysis or have higher computational costs.

VectorCombine

VectorCombine is responsible for optimizing vector operations when the target architecture actually benefits from it.

Blurred Lines

Sometimes the boundaries between these passes are not clear-cut, and optimizations that logically belong in one place might end up in another for practical reasons:

InstCombine vs InstSimplify

Sometimes, folds that logically belong in InstSimplify are implemented in InstCombine because:

They're too expensive for InstSimplify's context (which is called from many places) They have both simplifying and non-simplifying cases, making it cleaner to keep them together The pattern matching is more convenient in InstCombine's framework

For example, consider a fold that usually returns an existing value but occasionally needs to create a new instruction. Keeping both cases in InstCombine is simpler than splitting the logic.

Further Reading

Some great resources to learn about InstCombine: